Kubernetes

Node –> VM or physical machine

- Pod –> smallest deployable unit (usually one container per pod, but can be more). Each pod gets its own IP (internal, dynamic – from the K8s virtual network)

- Service –> fixed IP in front of pods (internal or external). Also acts as a load balancer, spreading requests across the pods

- Ingress –> entry point for end users

- ConfigMap –> external config for apps: URLs, IPs, ports (credentials belong in a Secret)

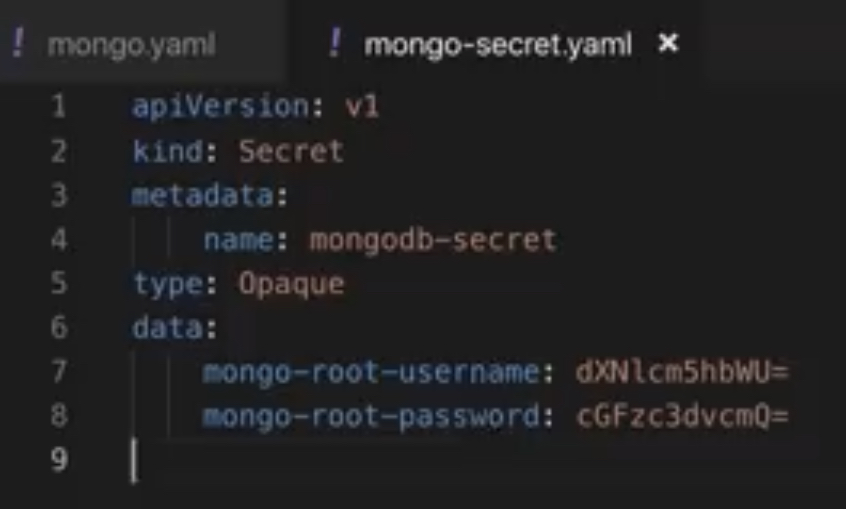

- Secret –> for credentials. Values are base64 encoded (encoded, not encrypted)

- Volumes –> external physical storage: local disk, cloud, NFS

- Deployment –> blueprint for app pods: how many replicas…

- StatefulSet –> for stateful apps such as DBs, where replicas have to stay in sync (DBs are often hosted outside the cluster)

- Basic architecture…

- 2 types of nodes: master and worker (slave)

- 3 processes must run on every worker (slave) node:

- 1. Container runtime: Docker or something else

- 2. Kubelet – the Kubernetes process that starts the pods on the node

- 3. Kube-proxy – forwards requests

- Master node runs 4 processes:

- 1. API server: cluster gateway and authentication. Every client goes through it: UI, kubectl, Kubernetes dashboard… etc.

- 2. Scheduler… decides which node to put the pod on (the least used one)

- 3. Controller manager… detects cluster state changes, such as crashed pods, and restarts them

- 4. etcd key-value store… cluster state info

minikube – for testing. Master and worker node on a single machine.

| Command | Description |
| --- | --- |
| minikube start --vm-driver=hyperkit | start the cluster; other drivers: docker, Hyper-V, KVM, Parallels, VirtualBox, VMware |
| kubectl get nodes | get status of nodes |
| minikube status | get status of minikube |
| kubectl version | display version |
| kubectl get pod (-o wide) | check pods (wide: more columns) |
| kubectl get services | check services |
| kubectl create deployment NAME --image=image | create deployment with pod in it |
| kubectl get deployment [name] (-o yaml) | check deployment (-o yaml also shows the 3rd part of the config, status) |
| kubectl get replicaset | show replicas |
| kubectl edit deployment [name] | edit config file |
| kubectl logs [pod name] | for debugging |
| kubectl describe pod [pod name] | for debugging, more info |
| kubectl exec -it [pod_name] -- /bin/bash | get pod terminal |
| kubectl delete deployment [name] | remove deployment and its pods |
| kubectl apply/delete -f [file_name] | create or delete deployment from config file (.yaml) |

### config.yaml ### example ###

### each file is made of 3 parts ###

### metadata: ...names

### specification: ...any kind of config. Attributes are specific to the kind

### status: ...automatically generated by Kubernetes

###++++++++++++++++####

#### YAML is very strict about indentation (syntax) ###

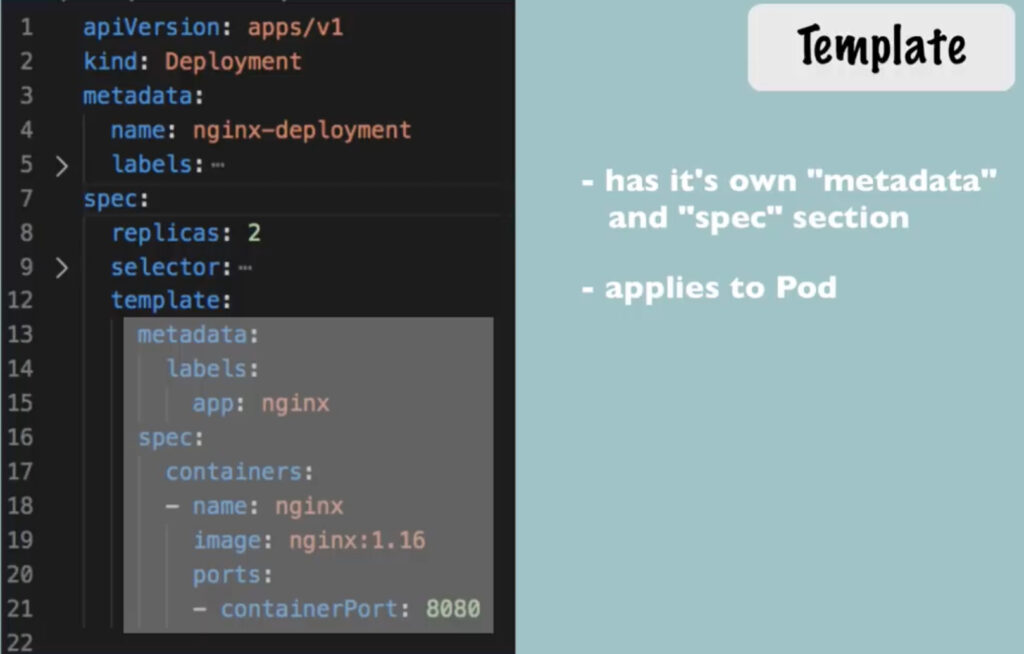

### template: has its own metadata and spec, which apply to the pod ###

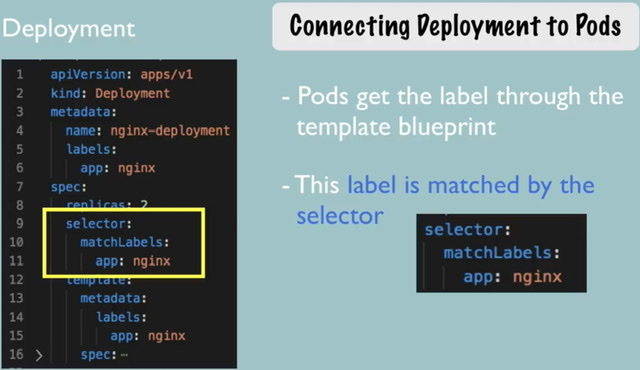

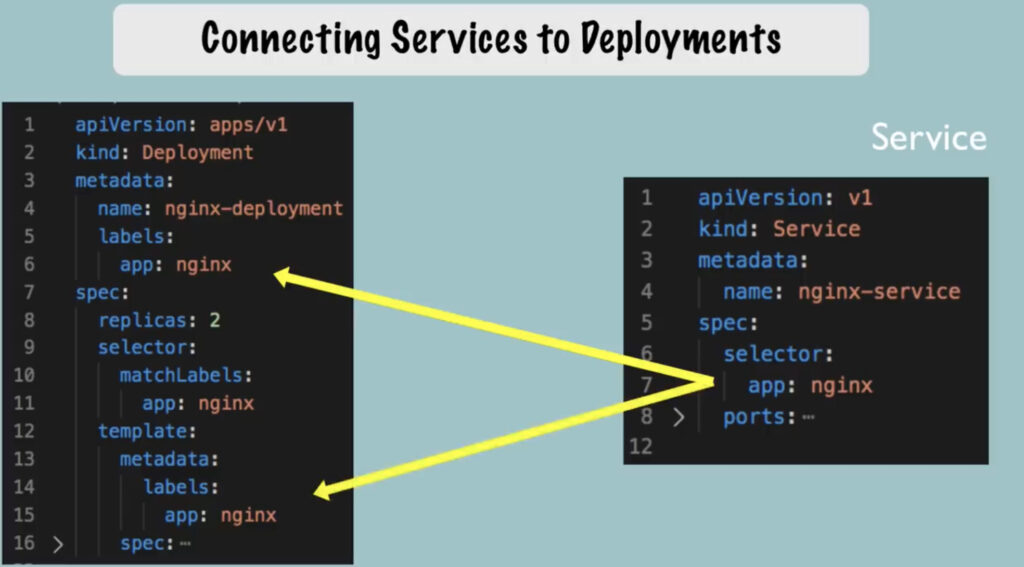

### Connection is established using labels and selectors ###

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-dpl
  labels:
    app: nginx
spec: ### spec for deployment
  replicas: 1
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec: ### spec for pod

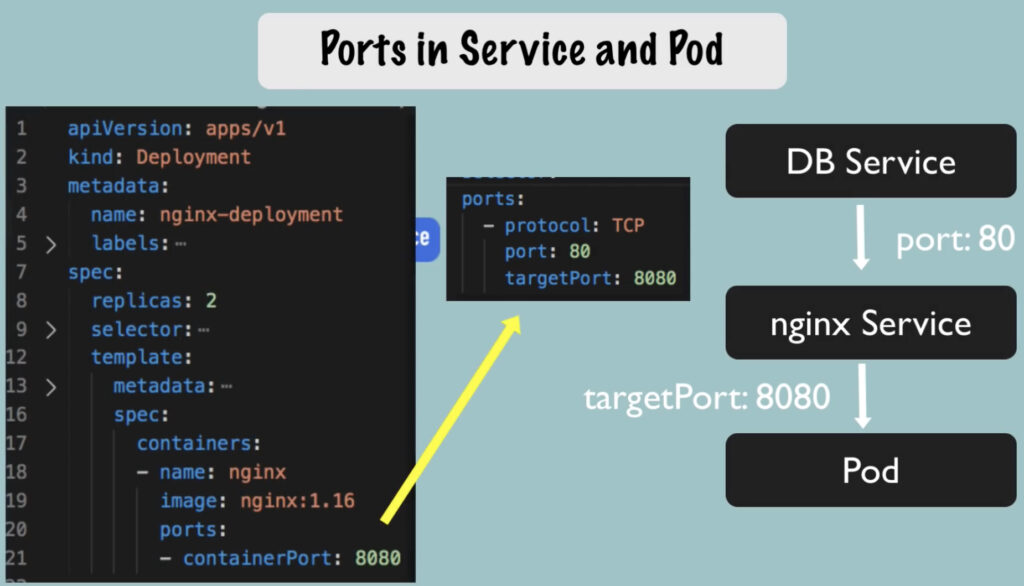

      containers:
        - name: nginx
          image: nginx:1.16
          ports:
            - containerPort: 80

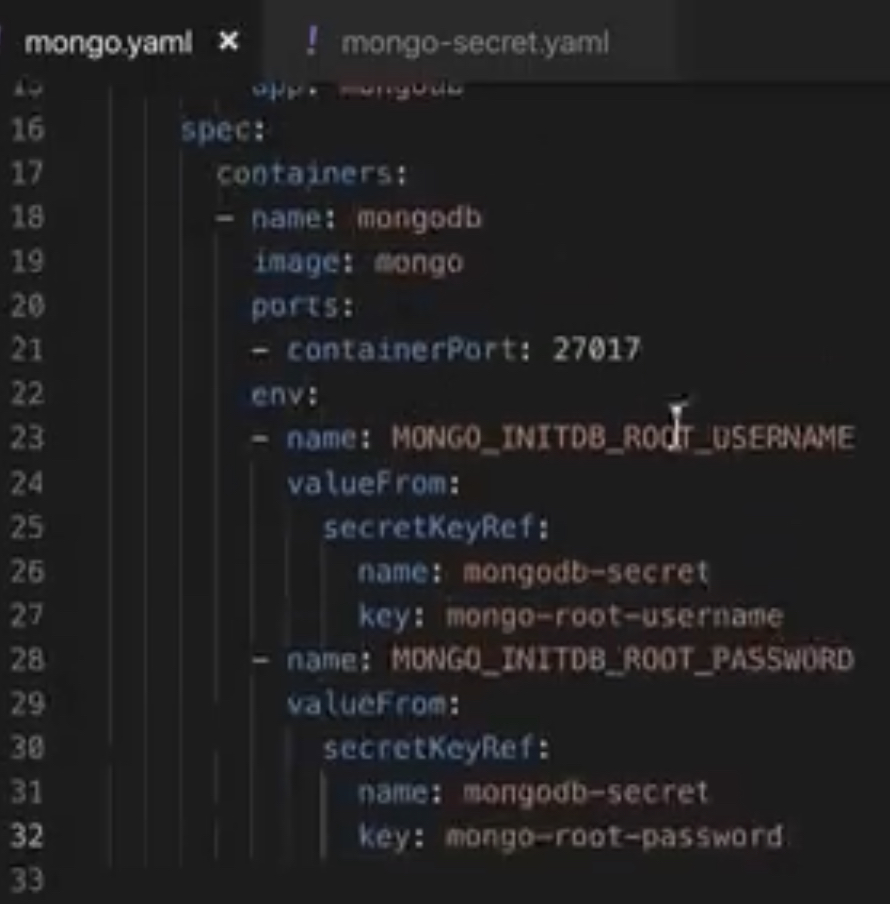
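To illustrate the label/selector connection, here is a minimal sketch of a Service that would route traffic to the pods of the nginx Deployment. The Service name `nginx-service` and the Service port 8080 are assumptions made for illustration; only the `app: nginx` label and container port 80 come from the Deployment example.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: nginx-service      # hypothetical name
spec:
  selector:
    app: nginx             # must match the labels in the pod template
  ports:
    - protocol: TCP
      port: 8080           # assumed port the Service listens on
      targetPort: 80       # must match containerPort of the pod
```

If the selector matched no pod labels, the Service would exist but have no endpoints, so requests to it would go nowhere.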

Set Secret
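A minimal sketch of what setting a Secret could look like. The names `mongodb-secret`, `mongo-root-username` and `mongo-root-password` are illustrative assumptions; the values are simply the strings `username` and `password`, base64 encoded.

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: mongodb-secret                  # hypothetical name
type: Opaque
data:
  mongo-root-username: dXNlcm5hbWU=     # base64 of "username"
  mongo-root-password: cGFzc3dvcmQ=     # base64 of "password"
```

A container could then read one of the values through `secretKeyRef`, in the same way a ConfigMap value is read through `configMapKeyRef`:

```yaml
env:
  - name: MONGO_INITDB_ROOT_USERNAME
    valueFrom:
      secretKeyRef:
        name: mongodb-secret            # the Secret defined above
        key: mongo-root-username
```

The Secret has to exist in the cluster before a pod that references it is started.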

### --- separates configs (multiple configs in one file are possible) ###

### deployment and service YAML go in one file ###

### ConfigMap... external config ###

apiVersion: v1
kind: ConfigMap
metadata:
  name: mongodb-configmap
data:
  database_url: mongodb-service ### name of the service is the URL

---

#### way to reference it... ###

env:
  - name: ME_CONFIG_MONGODB_SERVER
    valueFrom:
      configMapKeyRef:
        name: mongodb-configmap
        key: database_url
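The value `mongodb-service` only resolves if a Service with that name exists in the same namespace. A minimal sketch of such an internal Service follows, assuming the MongoDB pods carry the label `app: mongodb` and listen on MongoDB's default port 27017 (both are assumptions, not taken from the notes).

```yaml
apiVersion: v1
kind: Service
metadata:
  name: mongodb-service    # the name used as database_url
spec:
  selector:
    app: mongodb           # assumed label on the MongoDB pods
  ports:
    - protocol: TCP
      port: 27017          # assumed: MongoDB default port
      targetPort: 27017
```

No `type` is set, so this is a ClusterIP Service that is reachable only from inside the cluster.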

### external service for mongo UI ####

apiVersion: v1
kind: Service
metadata:
  name: mongo-express-service
spec:
  selector:
    app: mongo-express
  type: LoadBalancer ### this makes it external
  ports:
    - protocol: TCP
      port: 8081
      targetPort: 8081
      nodePort: 30000 ### port for the external IP, the one you put in the browser; must be in 30000-32767

| Command | Description |
| --- | --- |
| minikube service [name of the service] | to get external IP |
| kubectl cluster-info | show cluster info |

Namespaces ### virtual cluster inside the cluster, organises resources

kubectl get namespace ### 4 default namespaces

default ### resources you create go here

kube-node-lease ### each node has an associated lease

kube-public ### publicly accessible data

kube-system ### do NOT modify, for system use only

kubernetes-dashboard ### minikube only

######################################

config file

#############

apiVersion: v1
kind: ConfigMap
metadata:
  name: mysql-cfgmap
  namespace: my-namespace
data:
  db_url: mysql-service.database ### database is the namespace of the service

###############################

# each namespace must define its own ConfigMap

# same for Secret

# a Service can be shared between namespaces

# volumes and nodes cannot be namespaced

| Command | Description |
| --- | --- |
| kubectl create namespace [namespace_name] | create new namespace |
| kubectl get configmap -n [my_namespace] | show configmaps of a specific namespace |
| kubens [my_namespace] | change default namespace; kubectx has to be installed first |
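Besides `kubectl create namespace`, a namespace can also be declared in a config file and applied like any other resource. A minimal sketch, reusing the `my-namespace` name from the ConfigMap example:

```yaml
apiVersion: v1
kind: Namespace
metadata:
  name: my-namespace
```

Applying this file before the ConfigMap makes sure the namespace already exists when the ConfigMap is created in it.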

Ingress – external access via domain (no ports)

apiVersion: v1
kind: Service
metadata:
  name: myapp-ext-service
spec:
  selector:
    app: myapp
  type: LoadBalancer
  ports:
    - protocol: TCP
      port: 8080
      targetPort: 8080
      nodePort: 30010 ### public IP port, must be in 30000-32767

########################################

apiVersion: networking.k8s.io/v1beta1 ### removed in Kubernetes 1.22, newer clusters use networking.k8s.io/v1
kind: Ingress
metadata:
  name: myapp-ingress
spec:
  rules:
    - host: myapp.com ### entry point for end users
      http: ### not the browser's http
        paths: ### routing to internal service, URL path
          - backend:
              serviceName: myapp-ext-service
              servicePort: 8080 ### internal service port
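On clusters that no longer serve `networking.k8s.io/v1beta1` (it was removed in Kubernetes 1.22), the same rule has to be written against `networking.k8s.io/v1`, where the backend is nested differently. A sketch of the equivalent is below; `path: /` and `pathType: Prefix` are assumptions, added because `v1` requires a path type.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: myapp-ingress
spec:
  rules:
    - host: myapp.com
      http:
        paths:
          - path: /                       # assumed: route everything
            pathType: Prefix              # required in v1
            backend:
              service:
                name: myapp-ext-service   # replaces serviceName
                port:
                  number: 8080            # replaces servicePort
```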

Ingress controller: another pod that must be installed in the cluster to make Ingress work. It evaluates the rules and manages redirections. The K8s Nginx Ingress Controller comes from the Kubernetes project, but there are others.

| Command | Description |
| --- | --- |
| minikube addons enable ingress | starts and configures the Nginx Ingress Controller in minikube |
| kubectl get pod -n kube-system | check if it is running |

multiple paths with the same host

apiVersion: networking.k8s.io/v1beta1
kind: Ingress
metadata:
  name: multi-path
  annotations:
    nginx.ingress.kubernetes.io/rewrite-target: /
spec:
  rules:
    - host: myapp.com
      http:
        paths:
          - path: /analytics
            backend:
              serviceName: analytics-service
              servicePort: 3000
          - path: /shopping
            backend:
              serviceName: shopping-service
              servicePort: 5000

###########################

### or with subdomains ###

##########################

apiVersion: networking.k8s.io/v1beta1
kind: Ingress
metadata:
  name: subdomains
  annotations:
    nginx.ingress.kubernetes.io/rewrite-target: /
spec:
  rules:
    - host: analytics.myapp.com
      http:
        paths:
          - backend:
              serviceName: analytics-service
              servicePort: 3000
    - host: shopping.myapp.com
      http:
        paths:
          - backend:
              serviceName: shopping-service
              servicePort: 5000

TLS Cert

apiVersion: networking.k8s.io/v1beta1
kind: Ingress
metadata:
  name: tls-cert-example
spec:
  tls:
    - hosts:
        - myapp.com
      secretName: myapp-tls-cert
  rules:
    - host: myapp.com
      http:
        paths:
          - path: /
            backend:
              serviceName: myapp-internal-svc
              servicePort: 8080

###################

# tls secret #####

###############

apiVersion: v1
kind: Secret
metadata:
  name: myapp-tls-cert
  namespace: default
data:
  tls.crt: base64 encoded cert
  tls.key: base64 encoded key
type: kubernetes.io/tls ### has to be this type

Helm – package manager for Kubernetes – packages YAML files

Helm charts – bundles of YAML files

Helm is also a templating engine

| Command | Description |
| --- | --- |
| helm search <whatever-you-need> | search for charts |
| helm install <chart-name> | template files will be filled from values.yaml |
| helm install --values=my-values.yaml <chartname> | inject values from my-values.yaml into the template files |
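A rough sketch of how the templating fits together, assuming a hypothetical chart with one template file and a `values.yaml`. The file names follow the usual chart layout; the value names (`name`, `container.image` and so on) are made up for illustration.

```yaml
# templates/pod.yaml (hypothetical): placeholders are filled in by Helm
apiVersion: v1
kind: Pod
metadata:
  name: {{ .Values.name }}
spec:
  containers:
    - name: {{ .Values.container.name }}
      image: {{ .Values.container.image }}
      ports:
        - containerPort: {{ .Values.container.port }}
```

```yaml
# values.yaml (hypothetical): default values for the template above
name: my-app
container:
  name: my-app-container
  image: nginx:1.16
  port: 80
```

A file passed with `--values` overrides the matching keys from `values.yaml`; keys it does not mention keep their defaults.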

Volumes – 3 components

- Persistent Volume – PV

- Persistent Volume Claim – PVC

- Storage Class – SC

Storage can be an NFS server, a local disk or the cloud. PVs are NOT namespaced; they are accessible from all namespaces. DBs should always use remote storage.

#### NFS example ####

apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv-name
spec:
  capacity:
    storage: 20Gi
  volumeMode: Filesystem
  accessModes:
    - ReadWriteOnce
  persistentVolumeReclaimPolicy: Recycle
  storageClassName: slow
  mountOptions:
    - hard
    - nfsvers=4.0
  nfs:
    path: /dir/path/on/nfs/server
    server: nfs-server-ip-address

#### Google Cloud example ####

##############################

apiVersion: v1
kind: PersistentVolume
metadata:
  name: test-vol
  labels:
    failure-domain.beta.kubernetes.io/zone: us-central1-a__us-central1-b
spec:
  capacity:
    storage: 400Gi
  accessModes:
    - ReadWriteOnce
  gcePersistentDisk:
    pdName: my-beta-disk
    fsType: ext4

##### Local Storage Example #####

#################################

apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv-example
spec:
  capacity:
    storage: 40Gi
  volumeMode: Filesystem
  accessModes:
    - ReadWriteOnce
  persistentVolumeReclaimPolicy: Delete
  storageClassName: local-storage
  local:
    path: /mnt/disks/ssd1
  nodeAffinity:
    required:
      nodeSelectorTerms:
        - matchExpressions:
            - key: kubernetes.io/hostname
              operator: In
              values:
                - example-node

#################################

# PVC - Persistent Volume Claim # has to exist in the same namespace as the pod that uses it

#################################

kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: pvc-name ### <-- the pod refers to this name
spec:
  storageClassName: manual
  volumeMode: Filesystem
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 40Gi

____________________________________

# you have to use pvc in pod config #

____________________________________

apiVersion: v1
kind: Pod
metadata:
  name: my-app
spec:
  containers:
    - name: my-app
      image: nginx
      volumeMounts:
        - mountPath: "/var/www/html" ### the volume is mounted into the container here
          name: mypd
  volumes:
    - name: mypd
      persistentVolumeClaim:
        claimName: pvc-name ### <-- this is the reference

StorageClass provisions PersistentVolumes dynamically when a PVC claims them

apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: storage-class-name ### <--- reference
provisioner: kubernetes.io/aws-ebs
parameters:
  type: io1
  iopsPerGB: "10"
  fsType: ext4

#### you have to claim it via PVC ###

kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: pvc-name
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 40Gi
  storageClassName: storage-class-name ### <-- reference

StatefulSet

- Fixed individual DNS name for each pod

- $[pod name].$[governing service domain]

- The IP can change, the name stays the same
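The naming scheme can be sketched with a headless Service that governs a StatefulSet. All names here (`mongodb`, `mongodb-headless`), the `mongo` image and the replica count are assumptions chosen for illustration.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: mongodb-headless        # hypothetical governing service
spec:
  clusterIP: None               # headless: no cluster IP, DNS only
  selector:
    app: mongodb
  ports:
    - port: 27017
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: mongodb
spec:
  serviceName: mongodb-headless # links the pods to the governing service
  replicas: 3
  selector:
    matchLabels:
      app: mongodb
  template:
    metadata:
      labels:
        app: mongodb
    spec:
      containers:
        - name: mongodb
          image: mongo
          ports:
            - containerPort: 27017
```

With this setup the pods would be named `mongodb-0`, `mongodb-1` and `mongodb-2`, and inside the same namespace each would be reachable as `mongodb-0.mongodb-headless` and so on, even after a restart gives the pod a new IP.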

Services – stable IP in front of pods

- ClusterIP – default – internal only. Pod IPs come from the node's range: kubectl get pod -o wide

- Headless – communicate with 1 specific pod. Use case: stateful apps, like DBs. clusterIP: None. Uses DNS lookup

- NodePort – external traffic has access to a fixed port on each worker node (30000-32767 range), instead of going through Ingress. Just for testing, not for production.

- LoadBalancer – external access through the cloud provider's load balancer; an extension of NodePort and ClusterIP
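As a sketch of the NodePort type from the list, assuming a hypothetical app labelled `app: myapp` that listens on port 8080 (the Service name and the chosen node port are also assumptions):

```yaml
apiVersion: v1
kind: Service
metadata:
  name: myapp-nodeport-service  # hypothetical name
spec:
  type: NodePort
  selector:
    app: myapp
  ports:
    - protocol: TCP
      port: 8080                # port of the automatically created ClusterIP
      targetPort: 8080          # port the container listens on
      nodePort: 30008           # must be within 30000-32767
```

The app would then be reachable on port 30008 of every worker node's IP, which is why this type is convenient for quick tests but exposes more than is desirable in production.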

kubectl get endpoints ### same name as the service ###

### keeps track of which pods are members/endpoints of the service ####

DaemonSet – calculates how many replicas are needed based on the existing nodes: 1 replica per node.
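A minimal DaemonSet sketch. Note that there is no `replicas` field, because the number of pods follows the number of nodes. The name `node-agent` is an assumption, and the `busybox` container that only sleeps is a stand-in for a real per-node agent such as a log collector.

```yaml
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: node-agent              # hypothetical name
spec:
  selector:
    matchLabels:
      app: node-agent
  template:
    metadata:
      labels:
        app: node-agent
    spec:
      containers:
        - name: node-agent
          image: busybox        # placeholder image
          command: ["sh", "-c", "while true; do sleep 3600; done"]
```

When a node joins the cluster a pod is added to it automatically, and when a node is removed its pod goes away with it.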

### use | for multiline strings (line breaks are kept) and > to fold the lines into a single-line string ###

multilineString: |
  blah blah blah
  ooh la la, etc.
  and so on, next week
  at the same time
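The difference between the two styles can be seen side by side. The key names and text below are made up; the comments show the string value a YAML parser produces for each key.

```yaml
literalString: |
  first line
  second line
# value: "first line\nsecond line\n"

foldedString: >
  first part
  second part
# value: "first part second part\n"
```

The folded style is handy for long command lines or descriptions that should stay a single line but would be unreadable if written that way in the file.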
